A Low-Power Horizon Detection Chip

(Note: Because the project's writeup has not yet been published, I am providing only limited information about the project.)

Unmanned micro-aerial vehicles are rapidly being developed as low-cost, portable aerial surveillance platforms for semi-autonomous operation. While they are successfully achieving flight, the sensors needed for autonomous flight (as opposed to long-range navigation) are lacking. Obstacle avoidance and flight stability remain problems for such small vehicles with tiny weight and power budgets. Their small size makes them susceptible to even slight wind gusts, so processing speed is critical for stability.

While many visual-motion approaches to stabilizing aerial vehicles with low-power chips are being pursued (e.g., [1, 2]), detection of the horizon is also desirable for pitch and roll stabilization at high altitude. Several horizon-sensing systems with low sensor counts have been developed to assist aircraft pilots; they use contrast in the infrared spectrum [3] or in visible light [4]. In recent years, a team from the University of Florida (UF) has demonstrated an automatic visual horizon-finding algorithm running on a high-speed ground computer that receives a color video feed transmitted from the airplane [5]. We have devised a similar algorithm that uses an optimization approach embedded in an analog VLSI vision chip to find the best visual horizon and provide a measure of confidence. The VLSI implementation has the potential for real-time, low-power performance and for operation over a wider dynamic range of image intensities.

In this work, we have designed and tested a low-power, real-time visual horizon sensor for stabilizing miniature aircraft in pitch and roll during moderate-to-high-altitude flight.
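
Why a single horizon line is enough for both axes follows from standard pinhole-camera geometry. As a sketch, assume an ideal lens with focal length $f$ in pixel units, a camera looking straight ahead along the aircraft's roll axis, and a horizon distant enough to be treated as infinitely far away; none of these parameters is given for this chip. If the detected horizon line is tilted by $\alpha$ in the image and lies a signed perpendicular distance $d$ from the image center (taken positive when the horizon sits below the center, as it does when the nose is up), then

$$
\phi_{\mathrm{roll}} = \alpha, \qquad \theta_{\mathrm{pitch}} = \arctan\!\left(\frac{d}{f}\right)
$$

where the sign of $\alpha$ depends on the axis conventions chosen. Roll only rotates the image about its center, so it changes the tilt of the line but not its distance $d$.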

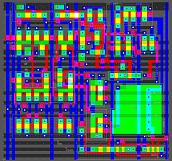

This sensor currently incorporates a 12x12 photoreceptor array (above) and finds a best-fit horizon line based on image intensity. The sensor includes a “confidence-level” output that allows a flight control system to detect poor sensing conditions. The chip was fabricated in a commercially available 0.5 µm CMOS process and consumes less than 2.5 mW from a 5 V power supply.
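
As a quick sanity check on the power figure (plain arithmetic from the numbers above, not an additional measurement), the average supply current is bounded by

$$
I = \frac{P}{V_{DD}} < \frac{2.5\ \mathrm{mW}}{5\ \mathrm{V}} = 0.5\ \mathrm{mA}
$$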

Each pixel of the 12x12 array contains a photoreceptor (green square in the bottom-right corner), circuits that compute whether the pixel is a 'sky' or 'ground' pixel, circuits that compute the average image intensity in each class, circuits that decide whether the pixel is misclassified, and circuits that compete globally to change the horizon vector.
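
The per-pixel computations just listed can be mimicked in software. The sketch below is an illustration, not the chip's circuit: it assumes a hypothetical line parameterization (an angle `theta` for the unit normal pointing toward the sky side, plus a signed `offset` from the array center), and it assumes a pixel counts as misclassified when its intensity is at least as close to the other class's mean as to its own. The names `classify` and `count_misclassified` are likewise made up for this example.

```python
import math
from typing import List

Image = List[List[float]]  # rows of pixel intensities; row 0 is the top of the array


def classify(width: int, height: int, theta: float, offset: float) -> List[List[bool]]:
    """Label each pixel True ('sky') or False ('ground').

    Hypothetical parameterization: the horizon line has unit normal
    (cos theta, sin theta) pointing toward the sky side and lies at signed
    distance `offset` from the array center. Row indices grow downward.
    """
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    nx, ny = math.cos(theta), math.sin(theta)
    return [[(x - cx) * nx + (y - cy) * ny > offset for x in range(width)]
            for y in range(height)]


def count_misclassified(image: Image, theta: float, offset: float) -> int:
    """Count 'unhappy' pixels: those at least as close to the other class mean."""
    height, width = len(image), len(image[0])
    labels = classify(width, height, theta, offset)
    sky = [image[y][x] for y in range(height) for x in range(width) if labels[y][x]]
    ground = [image[y][x] for y in range(height) for x in range(width) if not labels[y][x]]
    if not sky or not ground:
        return width * height  # degenerate line: one class is empty, so all pixels are unhappy
    sky_mean = sum(sky) / len(sky)  # average intensity of each class
    ground_mean = sum(ground) / len(ground)
    unhappy = 0
    for y in range(height):
        for x in range(width):
            v = image[y][x]
            own, other = (sky_mean, ground_mean) if labels[y][x] else (ground_mean, sky_mean)
            if abs(v - other) <= abs(v - own):  # ties count as unhappy
                unhappy += 1
    return unhappy


if __name__ == "__main__":
    # 12x12 test scene: bright sky (rows 0-5) over dark ground (rows 6-11).
    img = [[200.0] * 12 for _ in range(6)] + [[50.0] * 12 for _ in range(6)]
    up = -math.pi / 2  # normal pointing toward row 0, i.e. sky on top
    print(count_misclassified(img, up, 0.0))        # level line through the center: prints 0
    print(count_misclassified(img, up + 0.5, 0.0))  # tilted line: some pixels unhappy
```

Ties are counted as unhappy so that a line splitting the image into two classes with equal means (for example, a vertical line through a level horizon) is not mistaken for a perfect fit.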

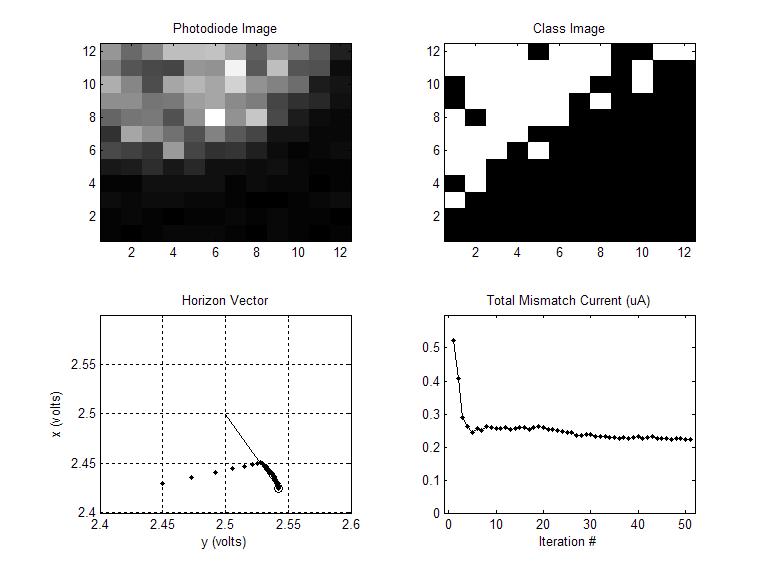

In the example to the left, an image consisting primarily of two intensity regions (bright and dark) is projected onto the chip surface. The chip computes a global horizon vector, and each pixel then reports its classification as sky or ground (top-right panel of the figure). The binary image shown is the final result.

The bottom-left panel of the figure to the left shows the path traced by the endpoint of the horizon vector (which is normal to the horizon line) as it changes over time. The line leading from the center of the image is the final vector solution.
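
The chip settles on its final horizon vector through analog, global competition among pixels, and the exact dynamics are not described here. As a loose software stand-in, the sketch below uses a plainly different technique, greedy local search: starting from an initial guess, it repeatedly tries the eight neighboring lines (a small rotation and/or shift) and moves to whichever leaves the fewest pixels unhappy, recording the endpoint of the horizon vector at each step. The line parameterization, the misclassification rule, the step sizes, and the names `count_misclassified` and `search_horizon` are all assumptions made for this illustration.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # rows of intensities; row 0 is the top of the array


def count_misclassified(image: Image, theta: float, offset: float) -> int:
    """Unhappy pixels for one candidate line (hypothetical parameterization).

    Sky = pixels whose position relative to the array center projects onto the
    unit normal (cos theta, sin theta) beyond `offset`. A pixel is unhappy if
    its intensity is at least as close to the other class mean as to its own.
    """
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    nx, ny = math.cos(theta), math.sin(theta)
    pixels = [(image[y][x], (x - cx) * nx + (y - cy) * ny > offset)
              for y in range(h) for x in range(w)]
    sky = [v for v, is_sky in pixels if is_sky]
    ground = [v for v, is_sky in pixels if not is_sky]
    if not sky or not ground:
        return len(pixels)  # degenerate line: every pixel unhappy
    m_sky, m_ground = sum(sky) / len(sky), sum(ground) / len(ground)
    return sum(1 for v, is_sky in pixels
               if abs(v - (m_ground if is_sky else m_sky))
               <= abs(v - (m_sky if is_sky else m_ground)))


def search_horizon(image: Image, theta: float, offset: float,
                   d_theta: float = math.radians(5.0), d_offset: float = 0.5,
                   max_steps: int = 100) -> List[Tuple[float, float, int]]:
    """Greedy descent on the unhappy-pixel count.

    Returns the trajectory as (x, y, count) tuples, where (x, y) is the
    endpoint of the horizon vector: the foot of the perpendicular from the
    array center to the current line.
    """
    best = count_misclassified(image, theta, offset)
    path = [(offset * math.cos(theta), offset * math.sin(theta), best)]
    for _ in range(max_steps):
        neighbors = [(theta + dt, offset + do)
                     for dt in (-d_theta, 0.0, d_theta)
                     for do in (-d_offset, 0.0, d_offset)
                     if dt != 0.0 or do != 0.0]
        score, t, o = min((count_misclassified(image, nt, no), nt, no)
                          for nt, no in neighbors)
        if score >= best:
            break  # no neighboring line makes fewer pixels unhappy
        theta, offset, best = t, o, score
        path.append((offset * math.cos(theta), offset * math.sin(theta), best))
    return path


if __name__ == "__main__":
    img = [[200.0] * 12 for _ in range(6)] + [[50.0] * 12 for _ in range(6)]
    # Start from a tilted, displaced guess with the normal pointing roughly up.
    for x, y, unhappy in search_horizon(img, -math.pi / 2 + 0.3, 2.25):
        print(f"endpoint=({x:+.2f}, {y:+.2f})  unhappy={unhappy}")
```

Like any greedy descent, this search can stop at a local minimum or on a plateau where no single step reduces the count, so the recorded path is only an analogy for the trajectory plotted in the bottom-left panel.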

At each step, the total number of pixels that consider themselves misclassified as sky or ground is summed and reported. This count can be taken as a measure of confidence in the horizon result (lower numbers mean higher confidence). For perfectly linear horizons this number could reach zero, but for more realistic images even an excellent horizon estimate cannot place a line that makes every pixel "happy".
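
The claim that only a straight horizon can drive the count to zero can be checked by brute force. The sketch below assumes a hypothetical line parameterization (unit normal at angle `theta` pointing toward the sky side, signed `offset` from the array center), assumes a pixel is unhappy when its intensity is at least as close to the other class's mean as to its own, and searches a coarse grid of lines; the names `count_misclassified`, `best_fit_count`, and `make_scene` are invented for this illustration.

```python
import math
from typing import List

Image = List[List[float]]  # rows of intensities; row 0 is the top


def count_misclassified(image: Image, theta: float, offset: float) -> int:
    """Unhappy pixels for one candidate line (hypothetical parameterization)."""
    h, w = len(image), len(image[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    nx, ny = math.cos(theta), math.sin(theta)
    pixels = [(image[y][x], (x - cx) * nx + (y - cy) * ny > offset)
              for y in range(h) for x in range(w)]
    sky = [v for v, is_sky in pixels if is_sky]
    ground = [v for v, is_sky in pixels if not is_sky]
    if not sky or not ground:
        return len(pixels)  # degenerate line: every pixel unhappy
    m_sky, m_ground = sum(sky) / len(sky), sum(ground) / len(ground)
    return sum(1 for v, is_sky in pixels
               if abs(v - (m_ground if is_sky else m_sky))
               <= abs(v - (m_sky if is_sky else m_ground)))


def best_fit_count(image: Image, n_angles: int = 36, n_offsets: int = 24,
                   offset_step: float = 0.5) -> int:
    """Smallest unhappy-pixel count over a coarse grid of candidate lines."""
    # Offsets are odd multiples of offset_step / 2 (here ..., -0.25, 0.25, 0.75, ...).
    offsets = [(k - n_offsets // 2) * offset_step + offset_step / 2
               for k in range(n_offsets)]
    return min(count_misclassified(image, 2 * math.pi * i / n_angles, o)
               for i in range(n_angles) for o in offsets)


def make_scene(hill: bool) -> Image:
    """12x12 scene: bright sky (200) over dark ground (50), optionally with a dark hill."""
    img = [[200.0 if y < 6 else 50.0 for _ in range(12)] for y in range(12)]
    if hill:
        for y in range(3, 6):      # hill occupies rows 3-5 ...
            for x in range(4, 8):  # ... and columns 4-7
                img[y][x] = 50.0
    return img


if __name__ == "__main__":
    print(best_fit_count(make_scene(hill=False)))  # straight horizon: prints 0
    print(best_fit_count(make_scene(hill=True)))   # hill: nonzero for every straight line
```

The hill case can never reach zero: on row 3 a dark hill pixel lies between two bright pixels, so any straight line puts it in the same class as at least one of them, and in a two-level image a mixed class always leaves someone closer to the other class's mean.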

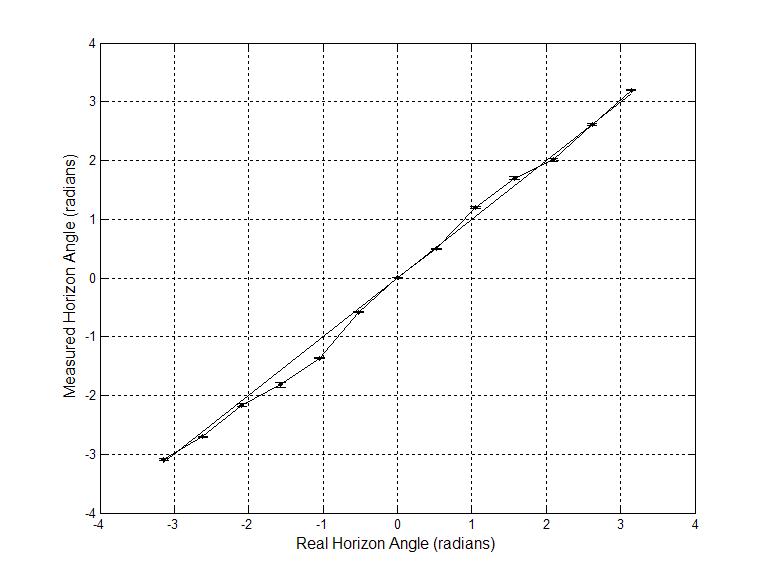

We also measured the accuracy of the horizon measurement by projecting a calibrated horizon image onto the chip and interpreting the chip's output voltages (without correction) as a horizon angle.
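
The chip's output encoding is not specified here, so the sketch below assumes a hypothetical one: two output voltages whose deviations from a reference `v_ref` (2.5 V, chosen arbitrarily as mid-supply) give the x and y components of the horizon vector, with y pointing up. Because the vector is normal to the horizon line, the line's tilt is the vector's angle minus 90°. The names `horizon_angle_deg` and `angle_error_deg` are illustrative only.

```python
import math


def horizon_angle_deg(v_x: float, v_y: float, v_ref: float = 2.5) -> float:
    """Horizon tilt in degrees, in [-180, 180), from two output voltages.

    Hypothetical encoding: (v_x - v_ref, v_y - v_ref) is the horizon vector,
    normal to the horizon line, with y up. A level horizon with sky above
    gives a vector pointing straight up and a tilt of 0; positive tilt means
    the line is rotated counter-clockwise.
    """
    normal_deg = math.degrees(math.atan2(v_y - v_ref, v_x - v_ref))
    tilt = normal_deg - 90.0  # the line is perpendicular to its normal
    return (tilt + 180.0) % 360.0 - 180.0  # wrap into [-180, 180)


def angle_error_deg(measured: float, reference: float) -> float:
    """Signed difference between measured and calibrated tilt, wrapped into [-180, 180)."""
    return (measured - reference + 180.0) % 360.0 - 180.0


if __name__ == "__main__":
    measured = horizon_angle_deg(3.5, 3.5)  # vector pointing up and to the right
    print(measured)                         # a 45-degree clockwise tilt, about -45
    print(angle_error_deg(measured, -44.0)) # error against a hypothetical calibrated -44 degrees
```

Keeping the full 360° range, rather than folding the line angle modulo 180°, preserves which side of the line is sky, so an inverted attitude is not mistaken for a level one.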

References

[1] T. Netter and N. Franceschini, "Towards UAV Nap-of-the-Earth flight using optical flow," Advances in Artificial Life, Proceedings, vol. 1674, pp. 334-338, 1999.

[2] W. E. Green, P. Y. Oh, K. Sevcik, and G. L. Barrows, "Autonomous Landing for Indoor Flying Robots Using Optic Flow," presented at ASME Intl. Mech. Engr. Congress, Washington, D.C., 2003.

[3] B. Taylor, C. Bil, and S. Watkins, "Horizon Sensing Attitude Stabilization: A VMC Autopilot," presented at 18th Intl. UAV Systems Conference, Bristol, UK, 2003.

[4] Futaba, "Futaba(R) PA-2: Pilot Assist Link Auto Pilot System," (http://www.futaba-rc.com/radioaccys/futm0999.html), 2004.

[5] S. M. Ettinger, M. C. Nechyba, P. G. Ifju, and M. Waszak, "Vision-guided flight stability and control for micro air vehicles," Advanced Robotics, vol. 17, pp. 617-640, 2003.